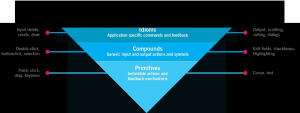

User Computer Interaction / Human–computer interaction

The interaction between user and computer takes place through interfaces. There are several ways for a user to interact with a computer. Studying these different ways helps to improve user-computer interaction by improving the usability of computer interfaces.

Mouse

A mouse is the most widely used pointing device and is omnipresent. The light pen was the first computer pointing device. It was a perfect tool for direct manipulation but was impractical to use with computers. No matter how precise our finger motions are, they drift unless we provide our hand with a firm foundation. Even with a stylus, it is difficult to make precise movements on a vertical surface with our arm and hand in the air, unsupported. The light pen cannot give us the precision we expect for accurate pointing.

Indirect Manipulation

When we roll the mouse around, we see a visual symbol, the cursor, move around on the video screen in the same way. The cursor moves left or right as the mouse moves left or right. When using a mouse for the first time, we immediately get the sensation that the mouse and cursor are connected, a sensation that is extremely easy to learn and equally hard to forget. This is good because perceiving how the mouse works by inspection is nearly impossible.

The mouse has no visual affordance that it is a pointing device until someone shows how it works. All idioms must be learned. Good idioms need only be learned once, and the mouse is certainly a good idiom. The motion of the mouse is proportional. With the heel of our hand resting firmly on the tabletop, our fingers can move with great accuracy. Although we use the term “direct manipulation” when we talk about pointing and moving things with the mouse, we are manipulating these things indirectly. A light pen points directly at the screen and can more properly be called a direct-manipulation tool because we point at the object itself. With the mouse, however, we are only manipulating a mouse on the desk, not the object on the screen.

Mouse Buttons

1. Left Mouse Button

2. Middle Mouse Button

3. Right Mouse Button

The left mouse button is used for all of the major direct-manipulation functions: triggering controls, making selections, drawing, etc. The most common meaning of the left mouse button is activation and selection. The right mouse button is used for extra, optional, or advanced functions. Microsoft described the right mouse button as the “shortcut” button in Windows 3.x and restated its position in the Windows 95 style guide, attributing to the right button “context-specific actions” (a way of saying “properties”). Application vendors can’t depend on the presence of a middle mouse button. Microsoft, in its style guide, states that the middle button should be assigned to operations or functions already in the interface.

Mouse Events:

1. Point (point)

2. Point, click, release (click)

3. Point, click, drag, release (click-and-drag)

4. Point, click, release, click, release (double-click)

5. Point, click, click another button, release (chord-click)

6. Point, click, release, click, release, click, release (triple-click)

7. Point, click, release, click, drag, release (double-drag)

1. Pointing: This simple operation is a cornerstone of the graphical interface and is the basis for all mouse operations. The user moves the mouse until its corresponding on-screen cursor is pointing to, or placed over, the desired object.

2. Clicking: While the user holds the mouse in a steady position, he presses the button down and releases it. In general, this action is defined as triggering a state change in a gizmo or selecting an object.

3. Clicking-and-dragging: This versatile operation has many common uses, including selecting, reshaping, repositioning, drawing, and dragging-and-dropping.

4. Double-clicking: A single click selects something; a double-click selects something and then takes action on it.

5. Chord-clicking: This means pressing two buttons simultaneously, although they don’t have to be pressed or released at precisely the same time. To qualify as a chord-click, the second mouse button must be pressed at some point before the first mouse button is released.

6. Triple-clicking: This means clicking three times in quick succession on something. This feature can be found even in very respectable programs. In Word, a triple-click selects a paragraph.

7. Double-dragging: This is used as a variant of selection extension; in other words, it is a selection tool. We can double-click in text to select an entire word, so, expanding that function, we can extend the selection word by word by double-dragging.
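
The seven event sequences listed above can be written down as a small lookup table. The following is only a sketch: the action names (`down`, `up`, `drag`, `down2`) are hypothetical labels chosen for illustration, and the timing that separates a double-click from two separate clicks is deliberately ignored.

```python
# Hypothetical action names, used only for this illustration:
#   "down"  = first button pressed     "up"   = button released
#   "drag"  = movement while held      "down2" = a second button pressed
GESTURES = {
    (): "point",
    ("down", "up"): "click",
    ("down", "drag", "up"): "click-and-drag",
    ("down", "up", "down", "up"): "double-click",
    ("down", "down2", "up"): "chord-click",
    ("down", "up", "down", "up", "down", "up"): "triple-click",
    ("down", "up", "down", "drag", "up"): "double-drag",
}


def classify(actions):
    """Return the gesture name for the actions that follow pointing, or None."""
    return GESTURES.get(tuple(actions))
```

For example, `classify(["down", "up", "down", "drag", "up"])` yields `"double-drag"`, while an unlisted sequence yields `None`.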

Up And Down Events

Each mouse button press generates two events: a button-down event and a button-up event.

1. Button-down event

Selection should occur on button-down. Button-down is the first step in dragging, and to drag something, it must first be selected. Over a gizmo such as a push button, button-down activates the gizmo only tentatively. A checkbox, for example, is shown as activated visually, but the check doesn’t appear until the button-up transition.

2. Button-up event

Button-down means propose the action; button-up means commit to the action over gizmos. If the cursor is positioned over a gizmo rather than selectable data, the action on the button-down event is to tentatively activate the gizmo’s state transition. On the button-up event, the gizmo commits to the state transition.

Focus

Focus indicates which program will receive the next input from the user, so focus is a term associated with the user’s viewpoint. The active program has the most prominent caption bar. Only one program can have the focus at a time. Normally, the program with the focus receives the next keystroke. A normal keystroke has no location component, so the focus can’t change because of it. A mouse button press has a location component and can change the focus as a side effect of its normal command. A mouse click that changes the focus is a new-focus click.

If we click the mouse somewhere in a window that already has the focus, the action is called an in-focus click. Windows interprets new-focus clicks as in-focus clicks with some uniformity. For example, if we change the focus to Word by clicking and dragging on its caption bar, Word not only gets the focus but is repositioned, too. But if we change the focus to Word by clicking on a document inside Word, Word gets the focus but the click is discarded; it is not also interpreted as an in-focus click within the document.
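
The propose-then-commit rule for button-down and button-up events, described earlier in this section, can be illustrated with a toy push button. This is a minimal sketch, not any toolkit’s API: the class and method names are hypothetical, and cancelling when the button is released outside the gizmo is an assumption based on common practice rather than something the text states.

```python
class PushButton:
    """Toy push button: button-down proposes, button-up commits."""

    def __init__(self):
        self.armed = False      # drawn as pressed, but nothing has happened yet
        self.activations = 0    # number of committed presses

    def button_down(self, inside):
        # Propose: show the pressed look only if the press landed on the button.
        self.armed = inside

    def button_up(self, inside):
        # Commit only if the release also happens over the button (assumption).
        if self.armed and inside:
            self.activations += 1
        self.armed = False
```

Pressing and releasing over the button increments `activations` once; pressing over it and releasing elsewhere leaves the count unchanged.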

Cursor Hinting

Cursor: the visible representation on the screen of the mouse’s position. By convention, it is a small arrow pointing slightly west of north. Inside a program, it can change shape as long as it stays relatively small: 32×32 pixels. One single pixel of any cursor is designated as the actual focus of pointing, called the hotspot. Any object or area on the screen that reacts to a mouse action is called pliant. The fact that an object is pliant must be communicated to the user.

Hinting: there are three ways to communicate the pliancy of an object to the user.

1. By the static visual affordances of the object itself (static visual hinting), e.g. the three-dimensional sculpting of a push button.

2. By its dynamically changing visual affordances.

3. By changing the visual affordances of the cursor as it passes over the object.

Active visual hinting

Some visual objects that are pliant are not obviously so, either because they are too small or because they are hidden. For example, scrollbars and status bars are not in the central area of the program’s face, so users may not understand that these objects are directly manipulable. There is less active visual hinting in the world of business and productivity software, which is too bad. The edutainment field uses it often, and its software is better for it.

Cursor hinting

Indicating pliancy is the most important role of cursor hinting. All cursor hinting is active cursor hinting because the cursor is dynamically changing. It involves changing the cursor to some shape that indicates what type of direct manipulation is acceptable. It is the most effective method for making data visually hint at its pliancy without disturbing its normal representation. The column dividers and screen splitters in Excel are good examples.
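
Cursor hinting amounts to a hit test on the cursor’s hotspot. The sketch below assumes rectangular pliant areas; the `PliantArea` type, the `cursor_for` function, and the cursor shape names are all hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class PliantArea:
    x: int
    y: int
    width: int
    height: int
    cursor: str  # cursor shape to show while the hotspot is inside this area


def cursor_for(hotspot, areas, default="arrow"):
    """Return the cursor shape for the hotspot position (x, y)."""
    hx, hy = hotspot
    for area in areas:
        inside_x = area.x <= hx < area.x + area.width
        inside_y = area.y <= hy < area.y + area.height
        if inside_x and inside_y:
            return area.cursor      # the object hints at its pliancy
    return default                  # nothing pliant under the hotspot
```

A narrow column divider, for instance, could be modeled as `PliantArea(100, 0, 4, 20, "resize-column")`, so that the cursor changes only while the hotspot is within those four pixels.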

Wait cursor hinting

Some tasks take a significant amount of time in human terms. The program’s cursor then changes to a visual indication that the program has gone stupid: wristwatches, spinning balls, steaming cups of coffee, etc. Informing the user when the program becomes stupid is a good idea, but the cursor isn’t the right tool for the job. It is too bad that the idiom has wide currency as a standard. The user-interface problem arises because the cursor is a system resource that is merely borrowed by a program.

In a non-preemptive system like Windows 3.x, if one program gets stupid, all programs get stupid. In the preemptive multitasking world of Windows 95, when one program gets stupid, it won’t necessarily make other programs get stupid. The wait cursor therefore cannot be used to indicate a busy state for any single program. Instead of using this cursor, the program should provide its own indicator for this task.

Meta keys

• CONTROL key

• ALT key

• SHIFT key

These keys help to extend direct-manipulation idioms when they are used in conjunction with the mouse.

Meta-key cursor hinting: as the meta keys go down, the cursor should change immediately to reflect the new intention of the idiom, e.g. the ALT and CTRL keys in Excel.

Selection

There are only two things we can do with a mouse: choose something, and choose something to do to what we’ve chosen. These choosing actions are referred to as selection, and they have many nuances (slight differences) in meaning. Selection is the mechanism by which the user informs the program which objects to remember. It indicates which data the next function will operate on.

Indicating selection

We purchase groceries by first selecting the objects. We place them in our shopping cart. We specify operations to execute on them by bringing the cart up to the checkout counter and expressing our desire to purchase. Selection is trivial. The user points to a data object with the mouse cursor, clicks, and the object is selected. We should visually indicate to the user when something is selected. The selection state must be easy to spot on a crowded screen, unambiguous, and must not obscure the object or what it is.

Word processors and spreadsheets almost always show black text on a white background, so it is reasonable to use the XOR inversion shortcut to show selection. When colors are used, the inversion still works but the results may be aesthetically (concerned with beauty and art) lacking. In a colored environment, the selection can get visually lost on any color we choose for selection. The solution is to instead highlight the selection with additional graphics that show its outline.
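
The XOR inversion shortcut and its weakness with colors can be seen in a few lines. This sketch assumes pixels are stored as 24-bit RGB integers, which is an assumption made for illustration only.

```python
WHITE = 0xFFFFFF  # 24-bit RGB with all bits set


def invert(pixels):
    """XOR every 24-bit RGB pixel with white, which inverts each color."""
    return [p ^ WHITE for p in pixels]
```

Black (`0x000000`) and white (`0xFFFFFF`) swap, and inverting twice restores the original, which is why the shortcut is cheap to apply and undo. A mid-gray pixel such as `0x808080`, however, becomes `0x7F7F7F`, which is almost the same color, so the selection nearly disappears.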

Discrete selection

1. Data is represented as distinct visual objects that can be manipulated independently of each other.

2. Examples are icons in Program Manager and graphic objects in draw programs.

3. These objects are discrete data.

4. Selection within discrete data is discrete selection.

Concrete selection

Data is represented as a matrix of many little contiguous pieces, e.g. text in a word processor or cells in a spreadsheet. These pieces are often selected in solid groups, so they are called concrete data. Selection within concrete data is concrete selection.

Insertion and replacement

In discrete selection, one or more discrete objects are selected, and the incoming data is handed to the selected discrete objects, which process it in their own way.

This may cause a replacement action, where the incoming data replaces the selected object. Alternatively, the selected objects may treat the incoming data as fodder (an object with one use) for some standard function. In PowerPoint, for example, incoming keystrokes with a shape selected result in a text annotation of the selected shape.

Concrete selection exhibits a unique peculiarity related to insertion, where the selection can shrink down to a single point that indicates a place in between two bits of data, rather than one or more bits of data. This in-between place is called the insertion point. We must decide whether this condition is appropriate for our program. Some discrete systems allow a selected object to be deselected by clicking on it again.

Mutual exclusion

When a selection is made, any previous selection is unmade. This behavior is called mutual exclusion, as the selection of one object excludes the selection of any other. An object becomes selected when the user clicks on it. That object remains selected until the user selects something else. Mutual exclusion is the rule in both discrete and concrete selection.

Additive selection

If mutual exclusion is turned off in discrete selection, we have the simple case where many independent objects can be selected merely by clicking on more than one in turn. This is called additive selection. Most discrete selection systems implement mutual exclusion by default and allow additive selection only by using a meta-key. The SHIFT meta-key is used for this most frequently.

For example, in a drawing program, after we have clicked to select one graphical object, we typically can add another one to our selection by SHIFT-clicking. Concrete selection systems should never allow additive selection because there should never be more than a single selection in a concrete system. However, concrete selection systems do need to enable their single allowable selection to be extended, and again, meta-keys are used.
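
The difference between the two selection models can be sketched as two small functions. The function names are hypothetical, and using SHIFT to extend a concrete selection is an assumption: the text only says that meta-keys are used for extension.

```python
def click_discrete(selection, obj, shift=False):
    """Return the new set of selected objects after clicking obj."""
    if shift:
        return selection | {obj}    # additive selection
    return {obj}                    # mutual exclusion: previous selection unmade


def click_concrete(anchor, position, shift=False):
    """Return (start, end) of the single selection in concrete data."""
    if shift and anchor is not None:
        # Extend the one allowable selection from the anchor to the click.
        return (min(anchor, position), max(anchor, position))
    return (position, position)     # collapses to an insertion point
```

In the discrete case, SHIFT-clicking a second shape gives a selection of two objects. In the concrete case there is never more than one selection; a meta-key click only stretches it.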

Group selection

The click-and-drag operation is also the basis for group selection. In a matrix of text or cells, it means “extend the selection” from the mouse-down point to the mouse-up point. This can also be modified with meta-keys. In Word, CTRL-click selects a complete sentence, so a CTRL-drag extends the selection sentence by sentence. Sovereign applications should rightly enrich their interaction with as many of these variants as possible.

Gizmo Manipulation

Direct manipulation is a human-computer interaction style that involves the continuous representation of objects of interest and rapid, reversible, and incremental actions and feedback. It allows users to manipulate objects presented to them, using actions that correspond at least loosely to the manipulation of physical objects, e.g. repositioning, resizing, and reshaping.

Repositioning

Repositioning is the simple act of clicking on an object and dragging it to another location. It is a form of direct manipulation that takes place on a higher conceptual level than that occupied by the object we are repositioning. That is to say, we are not manipulating some aspect of the object, but simply manipulating the placement of the object in space. This action consumes the click-and-drag action, making it unavailable for other purposes.

If the object is repositionable, the meaning of click-and-drag is taken and cannot be devoted to some other action within the object itself, like a button press. The most general solution to this conflict is to dedicate a specific physical area of the object to the repositioning function. For example, we can reposition a window in Windows or on the Macintosh by clicking-and-dragging its caption bar. The rest of the window is not pliant for repositioning, so the click-and-drag idiom is available there for more application-specific functions.

Resizing And Reshaping

When referring to the “desktop” of Windows and other similar GUIs, there isn’t any functional difference between resizing and reshaping. The user adjusts a rectangular window’s size and aspect ratio at the same time and with the same control by clicking-and-dragging on a dedicated gizmo. On the Macintosh, there is a special resizing control on each window in the lower-right corner, frequently nestled into the space between the application’s vertical and horizontal scrollbars. Dragging this control allows the user to change both the height and width of the rectangle.

Windows 3.x avoided this idiom in favor of a thick frame surrounding each window. The thick frame is an excellent solution. It offers both generous visual hinting and cursor hinting, so it is easily discovered. Its shortcoming is the amount of real estate it consumes. It may only be four or five pixels wide, but if we multiply that by the sum of the lengths of the four sides of the window, we’ll see that thick frames are expensive.

Windows 95 introduced a new reshaping-resizing gizmo that is remarkably like the Macintosh’s lower-right-corner reshaper/resizer. The gizmo is a little triangle with 45-degree, 3D ribbing, called a shingle. The shingle still occupies a square of space on the window, but most Windows 95 programs have a status bar of some sort across their bottoms, and the reshaper/resizer borrows space from it rather than from the client area of the window.

Thick frames and shingles are fine for resizing windows, but when the object to be resized is a graphical element in a painting or drawing program, it is not acceptable to permanently superimpose controls onto it. A resizing idiom for graphical objects must be visually bold to differentiate itself from parts of the drawing, yet it must respect the user’s view of the object and the space it swims in. The resizer must not obscure the resizing action. There is a popular idiom that accomplishes these goals.

It consists of eight little black squares, positioned one at each corner of a rectangular object and one centered on each side. These little black squares are often called “handles”. They can also be called grapples, and they also indicate selection.

Resizing and reshaping meta-key variants

The meta-key used for resizing and reshaping is the SHIFT key. Its function varies from program to program. In most applications, it is used to resize an object proportionally.
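
The handle layout and the proportional SHIFT variant described above are easy to compute. The following is a sketch under stated assumptions: the function names are hypothetical, and letting the new width drive the scale during a proportional resize is just one possible convention.

```python
def handle_positions(x, y, width, height):
    """Return the eight handle centers: four corners and four side midpoints."""
    xs = (x, x + width / 2, x + width)
    ys = (y, y + height / 2, y + height)
    # Take the 3x3 grid of points and skip the middle one, leaving eight.
    return [(xs[i], ys[j]) for j in range(3) for i in range(3) if (i, j) != (1, 1)]


def resize(width, height, new_width, new_height, shift=False):
    """Return the new size; with SHIFT held, keep the original aspect ratio."""
    if not shift:
        return (new_width, new_height)
    scale = new_width / width       # assumption: the width drives the scale
    return (new_width, height * scale)
```

With SHIFT held, dragging a 10 by 20 object out to a width of 20 gives a height of 40 regardless of where the cursor is vertically.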

Visual feedback and manipulation

Successful direct manipulation requires rich visual feedback. The direct-manipulation process has three phases.

Free phase

Before the user takes any action. Our job is to indicate direct-manipulation pliancy.

Captive phase

Once the user has begun the drag. There are two tasks:

1. Positively indicate that the direct-manipulation process has begun.

2. Visually identify the potential participants in the action.

Termination phase

After the user releases the mouse button. Indicate to the user that the action has terminated and show exactly what the result is.

Depending on which direct-manipulation phase we are in, there are two variants of cursor hinting. During the free phase, any visual change the cursor makes as it merely passes over something on the screen is free cursor hinting. Once the captive phase has begun, the changes to the cursor are captive cursor hinting.

Microsoft Word uses the clever free cursor hint of reversing the angle of the arrow when the cursor is to the left of the text, to indicate that selection will be line by line or paragraph by paragraph instead of character by character, as it normally is within the text itself. Microsoft is using captive cursor hinting more and more as it discovers its usefulness. Dragging and dropping text in Word or cells in Excel is accompanied by cursor changes indicating precisely what the action is and whether the objects are being moved or copied.

When something is dragged, the cursor must drag either the thing or some simulacrum (copy) of that thing. In a drawing program, for example, when we drag a complex visual element from one position to another, it may be too difficult for the program to actually drag the image (due to performance limitations), so it often just drags an outline of the object.
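
The three phases of direct manipulation form a small state machine, and the phase determines which kind of cursor hinting applies. This is only an illustrative sketch; the event names (`button_down`, `button_up`, `feedback_shown`) are hypothetical.

```python
def next_phase(phase, event):
    """Advance the direct-manipulation phase for a mouse event."""
    transitions = {
        ("free", "button_down"): "captive",
        ("captive", "button_up"): "termination",
        ("termination", "feedback_shown"): "free",
    }
    # Any other event leaves the phase unchanged.
    return transitions.get((phase, event), phase)


def hint_kind(phase):
    """Name the cursor hinting variant for a phase, or None if there is none."""
    kinds = {"free": "free cursor hinting", "captive": "captive cursor hinting"}
    return kinds.get(phase)
```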

Drag and Drop

Any mouse action is very efficient because it combines two command components in a single user action: a geographical location and a specific function. Drag-and-drop is doubly efficient because, in a single, smooth action, it adds a second geographical location. Although drag-and-drop was accepted as a cornerstone of the modern GUI, it has rarely been used outside of drawing and painting programs.

This seems to be changing as more programs add this idiom. Drag-and-drop is usually defined as “clicking on some object and moving it elsewhere”, which is really repositioning. A more accurate description is “clicking on some object and moving it to imply a transformation”. The Macintosh was the first successful system to offer drag-and-drop.

Source and Target

Drag-and-drop can move something from one place to another:

1. Inside a program (interior drag-and-drop)

2. From one program to another (external drag-and-drop), which is more sophisticated

When the user clicks on a discrete object and drags it to another discrete object to perform a function, it is called master-and-target. The master object is the object within which dragging originates, and it controls the entire process. It is a window. If we are dragging an icon, that icon is a window. If we are dragging a paragraph of text, the enclosing editor is the window. When the user ultimately releases the mouse button, whatever was being dragged is dropped on some target object.

How master-and-target works

A well-designed master object will visually hint at its pliancy, either statically in the way it is drawn, or actively, by animating as the cursor passes over it.

The idea that an object is draggable is easily learned idiomatically. It is difficult to forget that an icon, selected text, or other distinct object is directly manipulable, once the user has been shown this. He may forget the details of the action, so other feedback forms are very important after the user clicks on the object, but the fact of direct-manipulation pliancy itself is easy to remember. The first-timer or very infrequent user will probably require some additional help. This help will come either through additional training programs or by advice built right into the interface.

In general, a program with a forgiving interaction encourages users to try direct manipulation on various objects in the program. As soon as the user presses the mouse button over an object, that object becomes the master object for the duration of the drag-and-drop. On the other hand, there is no corresponding target object yet because the mouse-up point hasn’t been determined: it could be on another object or in the open space between objects. However, as the user moves the mouse around with the button held down (remember, this is called the captive phase), the cursor may pass over a variety of objects inside or outside the master object’s program.

If these objects are drag-and-drop compliant, they are possible targets, and they are called drop candidates. There can only be one master and one target in a drag, but there may be many drop candidates. Depending on the drag-and-drop protocol, the drop candidate may not know how to accept the particular dropped value; it just has to know how to accept the offered drop protocol.

Other protocols may require that the drop candidate recognize immediately whether it can do anything useful with the offered master object. The latter method is slower but offers much better feedback to the user. Remember, this operation is under direct human control, and the master object may pass quickly over dozens of drop candidates before the user positions it over the desired one. If the protocol requires extensive conversing between the master object and each drop candidate, the interaction can be sluggish, at which point it isn’t worth the game.

Visual Indications

The only task of each drop candidate is to visually indicate that the hotspot of the captive cursor is over it, meaning that it will accept the drop – or at least comprehend it – if the user releases the mouse button. Such an indication is active visual hinting.

Indicating drag pliancy

Once the master object is picked up and the drag operation begins, there must be some visual indication of this. The most visually rich method is to fully animate the drag operation, showing the entire master object moving in real time. This method is hard to implement, can be annoyingly slow, and very probably isn’t the proper solution. The problem is that a master-and-target operation requires a pretty precise pointer. For example, the master object may be 6 cm square, but it must be dropped on a target that is 1 cm square.

The master object must not obscure the target, and because the master object is big enough to span multiple drop candidates, we need to use a cursor hotspot to precisely indicate which candidate it will be dropped on. What this means is that, in master-and-target, dragging a transparent outline of the object may be much better than actually dragging a fully animated, exact image of the master object. It also means that the dragged object can’t obscure the normal arrow cursor either. The tip of the arrow is needed to indicate the exact hotspot.

Indicating drop candidacy

As the cursor traverses the screen, carrying with it an outline of the master object, it passes over one drop candidate after another. The drop candidates must visually indicate that they are aware of being considered as potential drop targets. By visually changing, the drop candidate alerts the user that it can do something constructive with the dropped object.

A point so obvious as to be difficult to see is that the only objects that can be drop candidates are those that are currently visible. A running application doesn’t have to worry about visually indicating its readiness to be a target if it isn’t visible. Internally, the master object should be communicating with each drop candidate as it passes over it. A brief conversation should occur, where the master asks the target whether it can accept a drop. If it can, the target indicates it with visual hinting.

Completing the drag-and-drop operation

When the master object is finally dropped on a drop candidate, the candidate becomes a real target. At this point, the master and target must engage in a more detailed conversation than the brief one that occurred between the master and all of the other drop candidates. After all, the user has committed and we now know the target. The target may know how to accept the drop, but that doesn’t necessarily mean that it can swallow the particular master object dropped in this specific operation.

It is better to indicate droppability and choke on the actual drop than it is to not indicate droppability. Once the drag-and-drop is negotiated, the format of the transfer remains to be resolved. If information is transferred, the master and target may wish to negotiate whether the transfer will be in some proprietary format known to both, or whether the data will have to be reduced in resolution to some [ ] but more common format like ASCII text.
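
The two conversations described above, a brief one during the drag and a detailed one at the drop, might look like the following. This is a minimal sketch of one possible protocol, not any real drag-and-drop API; the class, the function names, and the format labels are all hypothetical.

```python
class DropCandidate:
    def __init__(self, name, formats):
        self.name = name
        self.formats = formats      # formats this candidate understands, best first
        self.highlighted = False

    def can_accept(self, offered):
        """Brief conversation: is any offered format understood?"""
        return any(f in offered for f in self.formats)


def drag_over(candidate, offered):
    """Called while the hotspot is over a candidate; updates its visual hint."""
    candidate.highlighted = candidate.can_accept(offered)
    return candidate.highlighted


def drop(candidate, offered):
    """Detailed conversation: pick the first mutually known format, else None."""
    candidate.highlighted = False
    for f in candidate.formats:
        if f in offered:
            return f
    return None
```

A candidate that prefers a proprietary format but also understands ASCII would fall back to `"ascii"` when that is all the master offers. A return value of `None` corresponds to choking on the actual drop.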

Visual Indication of completion

If the target and the master can agree, the appropriate operation then takes place. A vital step at this point is the visual indication that the operation has occurred. If the operation is a transfer, the master object must disappear from its source and reappear in the target. If the target represents a function rather than a container (such as a print icon), the icon must visually hint that it received the drop and is now printing. We can do this with an animation or by changing its visual state.

Tool manipulation

In drawing and painting programs, the user manipulates tools with drag-and-drop, where a tool or shape is dragged onto a canvas and used as a drawing tool. There are two basic variants of this, called modal tools and charged cursors.

Modal tool

With a modal tool, the user selects a tool from a list, usually called a toolbox or palette. The program is now completely in the mode of that tool: it will only do that one tool’s job. The cursor usually changes to indicate the active tool. Modal tools work both for tools that perform actions on drawings, like an eraser, and for shapes that can be drawn, like ellipses. The cursor can become an eraser tool and erase anything previously entered, or it can become an ellipse tool and draw any number of new ellipses.

Charged Cursor

The charged cursor is the second tool-manipulation drag-and-drop technique. With a charged cursor, the user again selects a tool or shape from a palette, but this time the cursor, rather than becoming an object of the selected type, becomes loaded, or charged, with a single instance of the selected object. When the user clicks once on the drawing surface, an instance of the object is created and dropped on the surface at the mouse-up point. PowerPoint uses this extensively. The user selects a rectangle from the graphics palette, and the cursor then becomes a modal rectangle tool charged with exactly one rectangle.
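
The difference between a modal tool and a charged cursor comes down to whether the tool survives a click. The sketch below uses a hypothetical `Canvas` class to show this; it is not modeled on any particular program.

```python
class Canvas:
    def __init__(self):
        self.tool = None        # currently loaded tool or shape, if any
        self.charged = False    # True if the cursor holds a single instance
        self.shapes = []        # (shape, x, y) tuples dropped so far

    def pick(self, tool, charged=False):
        """Select a tool from the palette, as a modal tool or a charged cursor."""
        self.tool, self.charged = tool, charged

    def click(self, x, y):
        if self.tool is None:
            return              # nothing loaded, so the click draws nothing
        self.shapes.append((self.tool, x, y))
        if self.charged:
            self.tool = None    # a charged cursor is spent after one drop
```

A modal ellipse tool keeps drawing ellipses on every click, whereas a charged rectangle cursor produces exactly one rectangle and then reverts.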

Bomb Sighting

As the user drags a master object around the screen, each drop candidate visually changes as it is pointed to, which indicates its ability to accept the drop. In some programs, the master object can instead be dropped in the spaces between other objects. This variant of drag-and-drop is called bombardier. Dragging text in Word, for example, is a bombardier operation, as are most rearranging operations. The vital visual feedback of bombardier drag-and-drop is showing where the master object will fall if the user releases the mouse button.

In master-and-target, the drop candidate becomes visually highlighted to indicate the potential drop, but in bombardier, the potential drop will be in some space where there is no object at all. The visual hinting is something drawn on the background of the program or in its concrete data. This visual hint is called a bombsight, e.g. when rearranging slides in PowerPoint.
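
Drawing a bombsight requires knowing which gap the cursor is closest to. The sketch below assumes a single horizontal row of equally wide items, as in a slide sorter; the function name and the midpoint rule are illustrative assumptions.

```python
def bombsight_index(item_lefts, item_width, cursor_x):
    """Return the gap index (0..len) where a dropped item would land."""
    for i, left in enumerate(item_lefts):
        # Left of an item's midpoint means the drop lands before that item.
        if cursor_x < left + item_width / 2:
            return i
    return len(item_lefts)          # past the last midpoint: drop at the end
```

The program would then draw the bombsight, for example a vertical bar, in the gap with the returned index.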

Drag and Drop Problems and solutions

When we are first exposed to the drag-and-drop idiom, it seems pretty simple, but for frequent users and in some special conditions, it can exhibit problems and difficulties that are not so simple. As usual, the iterative refinement process of software design has exposed these shortcomings, and in the spirit of invention, clever designers have devised equally clever solutions.

Autoscroll

When the selected object is dragged beyond the border of the enclosing application rectangle, the program must interpret whether the object is being dragged to a new position inside or outside of the enclosing rectangle. In Microsoft Word, when a piece of selected text is dragged outside the visible text window, what is the drag intended for?

Either the user wants to put that piece of text into another program, or the user wants to put that text somewhere else in the same document, in a place that is currently scrolled off the screen. The first case is easy. For the second case, the application must scroll in the direction of the drag to reposition the selection at a distant, not-currently-visible location in the same document. This scrolling is called autoscroll. Autoscroll is a very important addition to drag-and-drop.

Wherever the drop target can possibly be scrolled off screen, the program requires autoscroll. There must be some time delay in autoscrolling. The autoscrolling should only begin after the drag cursor has been in the auto-scroll zone for some reasonable time cushion, about a half-second.

Avoiding drag-and-drop twitchiness

When an object can be either selected or dragged, it is vital that the mouse be biased towards the selection operation, to avoid twitchiness (sudden quick movement). Because it is so difficult to click on something without inadvertently moving the cursor a pixel or two, the frequent act of selecting something must not accidentally cause the program to misinterpret the action as the beginning of a drag-and-drop operation.
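
The autoscroll delay described in this section, where scrolling starts only after the drag cursor has stayed in the auto-scroll zone for about half a second, could be sketched as follows. The class name and the `update` interface are hypothetical, and time is passed in explicitly so that the example does not depend on a real clock.

```python
class AutoScroller:
    """Start scrolling only after the drag cursor lingers in the auto-scroll zone."""

    def __init__(self, delay=0.5):
        self.delay = delay          # seconds; "about a half-second" in the text
        self.entered_at = None      # time the cursor entered the zone, if inside

    def update(self, in_zone, now):
        """Return True if the view should scroll at time `now` (in seconds)."""
        if not in_zone:
            self.entered_at = None  # leaving the zone resets the timer
            return False
        if self.entered_at is None:
            self.entered_at = now
        return now - self.entered_at >= self.delay
```

A drag that merely passes through the zone on its way to another program never triggers scrolling, because the timer resets as soon as the cursor leaves.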

The user rarely wants to drag an object one or two pixels across the screen. The time it takes to perform a drag is usually much greater than the time it takes to perform a selection, and the drag is often accompanied by a repaint, so objects on the screen will flash and flicker. This is very disturbing to users who are expecting a simple selection. Additionally, the object is now displaced by a couple of pixels. The user probably had the object just where he wanted it, so having it displaced by even one pixel will not please him.

To fix it, he’ll have to drag the object back one pixel, a very demanding operation. In the hardware world, controls like push buttons that have mechanical contacts can exhibit what is known as “bounce”. This situation is analogous to the oversensitive mouse problem, and the solution is to copy the switch makers and debounce the mouse. The program should therefore establish a drag threshold to avoid such situations. Essentially, all mouse-movement messages that arrive after the mouse button goes down and capture begins are ignored unless the movement exceeds some small threshold amount, say three pixels.
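
A drag threshold can be implemented by remembering where the button went down and ignoring movement until it exceeds the threshold. The sketch below uses the three-pixel value from the text; the class and method names are hypothetical, and measuring the distance per axis rather than diagonally is an assumption.

```python
class DragDetector:
    def __init__(self, threshold=3):
        self.threshold = threshold  # pixels of movement to ignore
        self.origin = None          # where the button went down
        self.dragging = False

    def button_down(self, x, y):
        self.origin = (x, y)
        self.dragging = False

    def mouse_move(self, x, y):
        """Return True once the movement counts as a drag."""
        if self.origin is None:
            return False            # button is not down
        if not self.dragging:
            dx = abs(x - self.origin[0])
            dy = abs(y - self.origin[1])
            self.dragging = max(dx, dy) > self.threshold
        return self.dragging

    def button_up(self):
        """Return "drag" or "select" depending on what the gesture became."""
        result = "drag" if self.dragging else "select"
        self.origin = None
        self.dragging = False
        return result
```

Once the threshold has been exceeded, the gesture stays a drag even if the cursor returns to the starting point, so the user can still drop the object back where it started.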

Mouse Vernier

The weakness of the mouse as a precision pointing tool is readily apparent, particularly when dragging objects around in drawing programs. It is hard to drag something to the exact desired spot, especially when the screen resolution is 100 or more pixels per-inch and the mouse is running at a six-to-one ratio to the screen. To move the cursor one pixel, we have to move the mouse precisely one six-hundredth of an inch. Not easy to do.

During a drag, if the user decides that he needs more precise alignment, he can change the ratio of the mouse’s movement relative to the object’s movement on the screen. Any program that might demand precise alignment must offer a Vernier facility. This includes, at a minimum, all drawing and painting programs, presentation programs, etc. There are several acceptable variants of this idiom.
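
The arithmetic behind the precision problem, and the effect of changing the movement ratio, can be checked with a short sketch. The function names are hypothetical, and the ten-to-one Vernier ratio is used only as an example value.

```python
from fractions import Fraction


def mouse_travel_per_pixel(pixels_per_inch, mouse_ratio):
    """Inches the mouse must move to move the cursor by one pixel."""
    return Fraction(1, pixels_per_inch * mouse_ratio)


def vernier_delta(mouse_pixels, ratio=10):
    """Object movement in pixels for a cursor movement in Vernier mode."""
    # int() truncates toward zero, so small movements in either direction
    # are absorbed instead of nudging the object.
    return int(mouse_pixels / ratio)
```

At 100 pixels per inch and a six-to-one ratio, `mouse_travel_per_pixel(100, 6)` gives one six-hundredth of an inch. In Vernier mode with a ratio of ten, thirty pixels of mouse movement move the object three pixels.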

In Vernier mode, each ten pixels of mouse movement would be interpreted as a single pixel of object movement. Any program that demands precise alignment must offer a Vernier